Root-Project: Keras Parser for SOFIE

Translating a .h5 file to SOFIE's RModel

SOFIE was conceived as a fast inference engine that generates easy-to-use inference code with very few dependencies, so that a trained machine learning model can be used for prediction in any production environment. SOFIE follows the ONNX standard for storing the model configuration, which therefore contains the initialized tensors, intermediate tensors, input and output tensors, and the operators that act on those tensors to return the required prediction.

Keras models can be converted to the .onnx format and then used for inference in ONNX Runtime. Since TMVA supports the development of Keras models, it was necessary to build a converter that parses a saved Keras .h5 file into an RModel object.

In this post of my GSoC'21 with CERN-HSF series, I describe my work on the Keras parser, which can be used to convert a saved Keras .h5 file into an RModel object.

1. RModel & ROperators

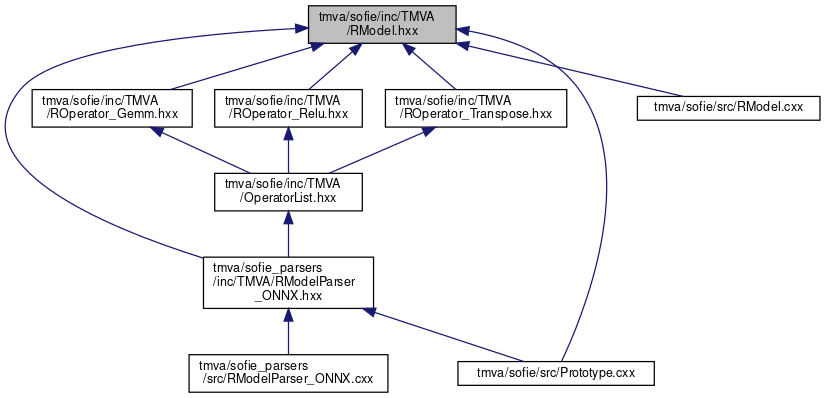

RModel is the primary data structure used to hold the model configuration of any deep learning model. Inspired by ONNX, RModel follows a similar data structure format.

For instantiating an RModel object, we execute the following steps.

- Add the operators along with the names of the input & output tensors, data types, and any other attributes if required.

- Add the input tensor names, their data types, and shapes.

- Add the data types, shapes, and values (weights) of Initialized Tensors.

- Add the output-tensor names of the model.

After these steps, Generate() is called. It initializes the intermediate tensors and generates the inference code by chaining the input, initialized, and intermediate tensors through the operators until they map to the declared output tensors. Checks verify that the provided shapes, data types, and operators are valid.

Once this is complete, the RModel object holds all the information about the model configuration and weights.
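The four steps and the final check can be sketched in a few lines of Python. This is only an illustration: `ToyModel` and all of its method names are hypothetical stand-ins, not the RModel API, and the check is limited to tensor names rather than shapes or data types.

```python
from dataclasses import dataclass, field


@dataclass
class ToyModel:
    """Hypothetical stand-in for RModel; not the SOFIE API."""
    operators: list = field(default_factory=list)
    inputs: dict = field(default_factory=dict)
    initialized: dict = field(default_factory=dict)
    outputs: list = field(default_factory=list)

    def add_operator(self, op_type, input_names, output_names, **attrs):
        self.operators.append((op_type, list(input_names), list(output_names), attrs))

    def add_input(self, name, dtype, shape):
        self.inputs[name] = (dtype, tuple(shape))

    def add_initialized(self, name, dtype, shape, values):
        self.initialized[name] = (dtype, tuple(shape), list(values))

    def add_outputs(self, names):
        self.outputs = list(names)

    def check(self):
        """Walk the operators in order and return the tensors they produce."""
        known = set(self.inputs) | set(self.initialized)
        for op_type, ins, outs, _ in self.operators:
            missing = [n for n in ins if n not in known]
            if missing:
                raise ValueError(f"{op_type}: unknown input tensors {missing}")
            known.update(outs)  # operator outputs become available downstream
        never_produced = [n for n in self.outputs if n not in known]
        if never_produced:
            raise ValueError(f"model outputs never produced: {never_produced}")
        return sorted(known - set(self.inputs) - set(self.initialized))


model = ToyModel()
model.add_operator("Gemm", ["x", "W", "b"], ["dense_out"])  # step 1
model.add_operator("Relu", ["dense_out"], ["y"])
model.add_input("x", "float32", (1, 4))                     # step 2
model.add_initialized("W", "float32", (4, 2), [0.0] * 8)    # step 3
model.add_initialized("b", "float32", (2,), [0.0] * 2)
model.add_outputs(["y"])                                    # step 4
produced = model.check()
```

Here `produced` lists the tensors created by the operators, which is roughly the role the intermediate tensors play when the real inference code is generated.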

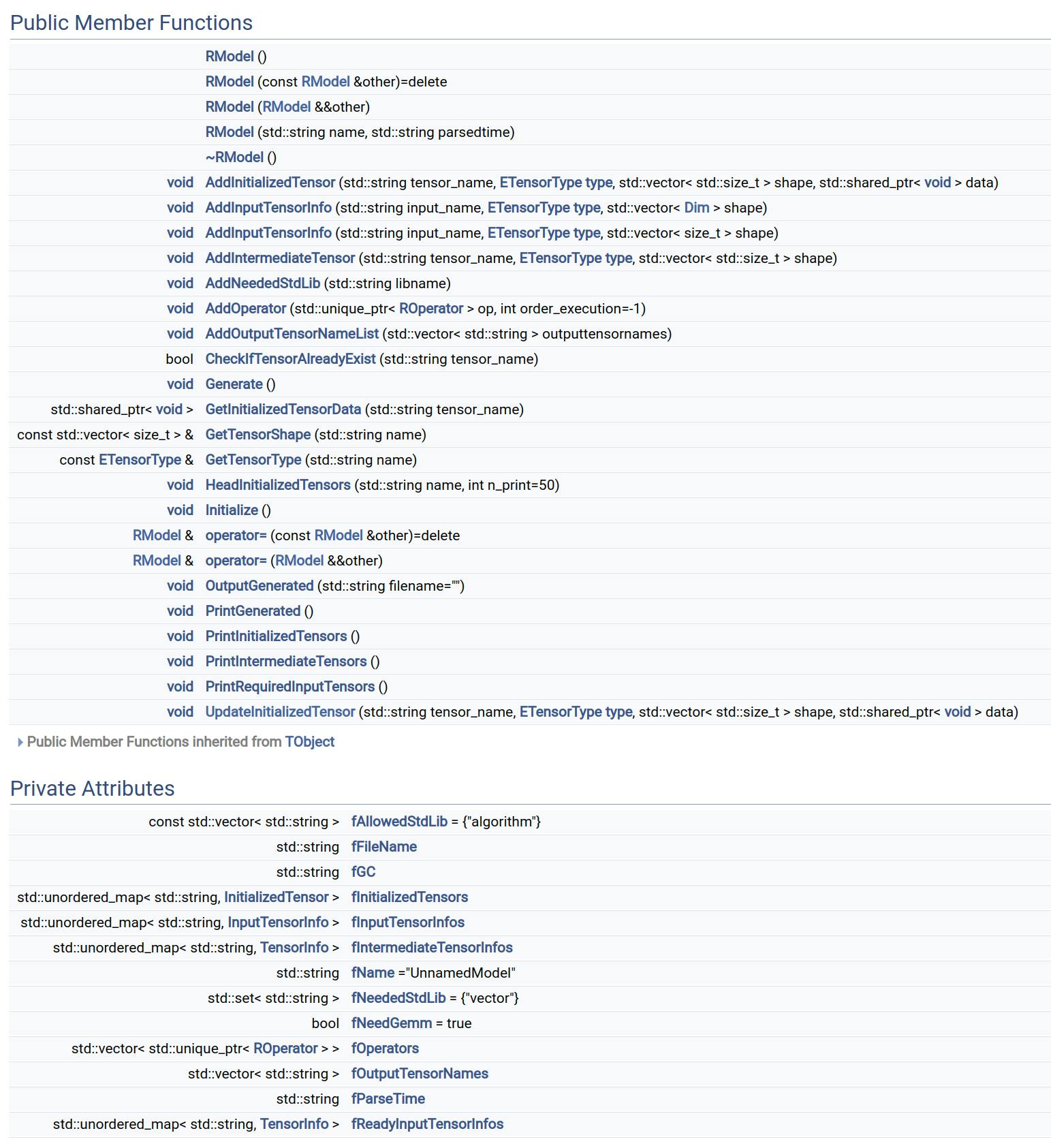

ROperators are the deep learning operators specified by ONNX. They are designed to accept incoming tensors, apply an operation or transformation to them, and return the output tensors. More information about these operators is available in the ONNX operator documentation.

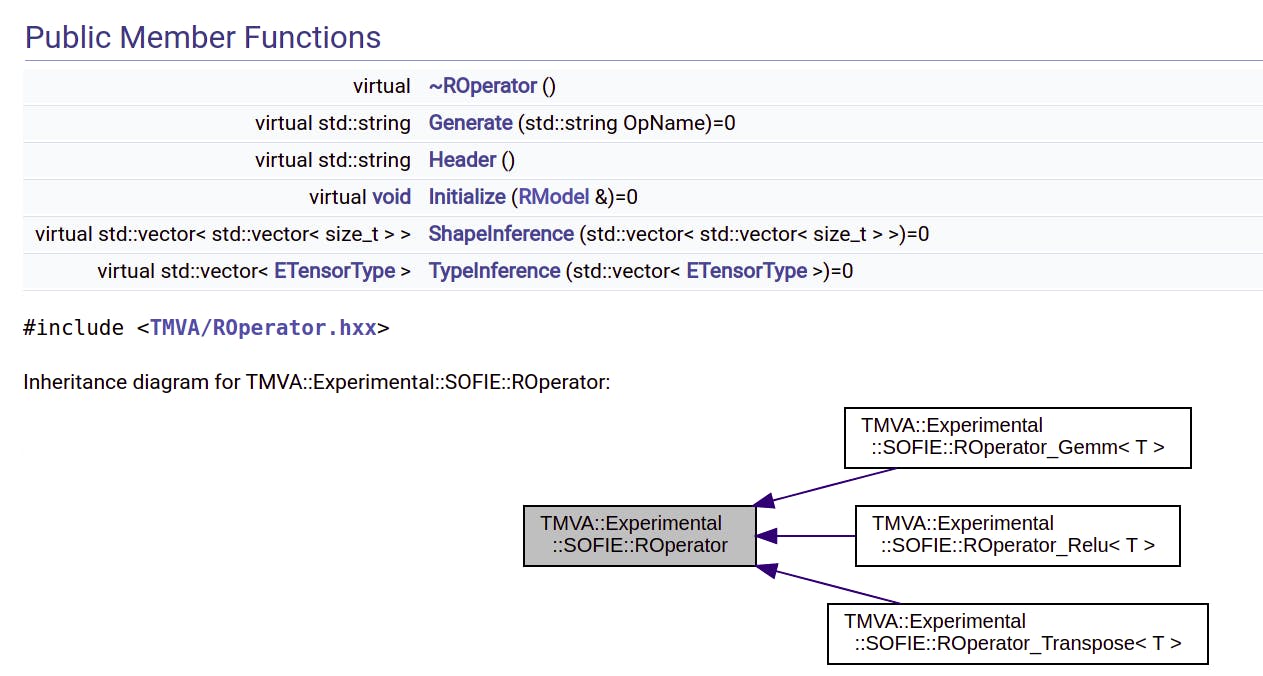

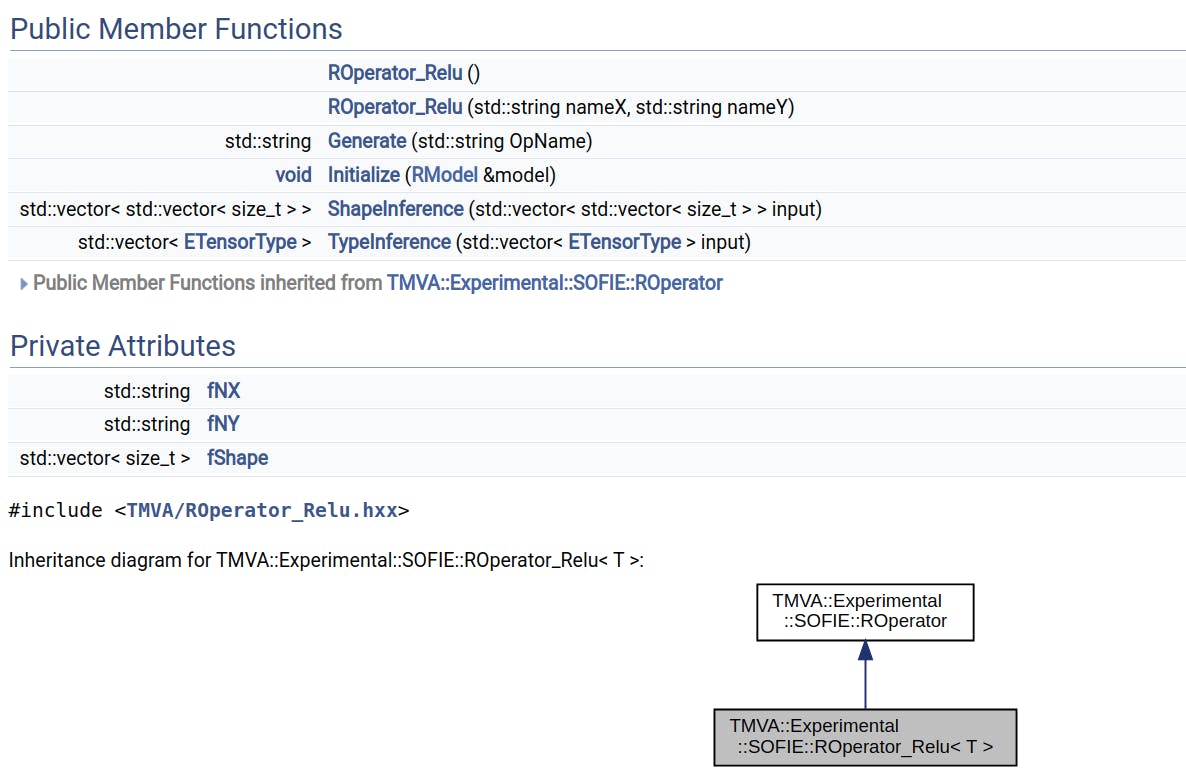

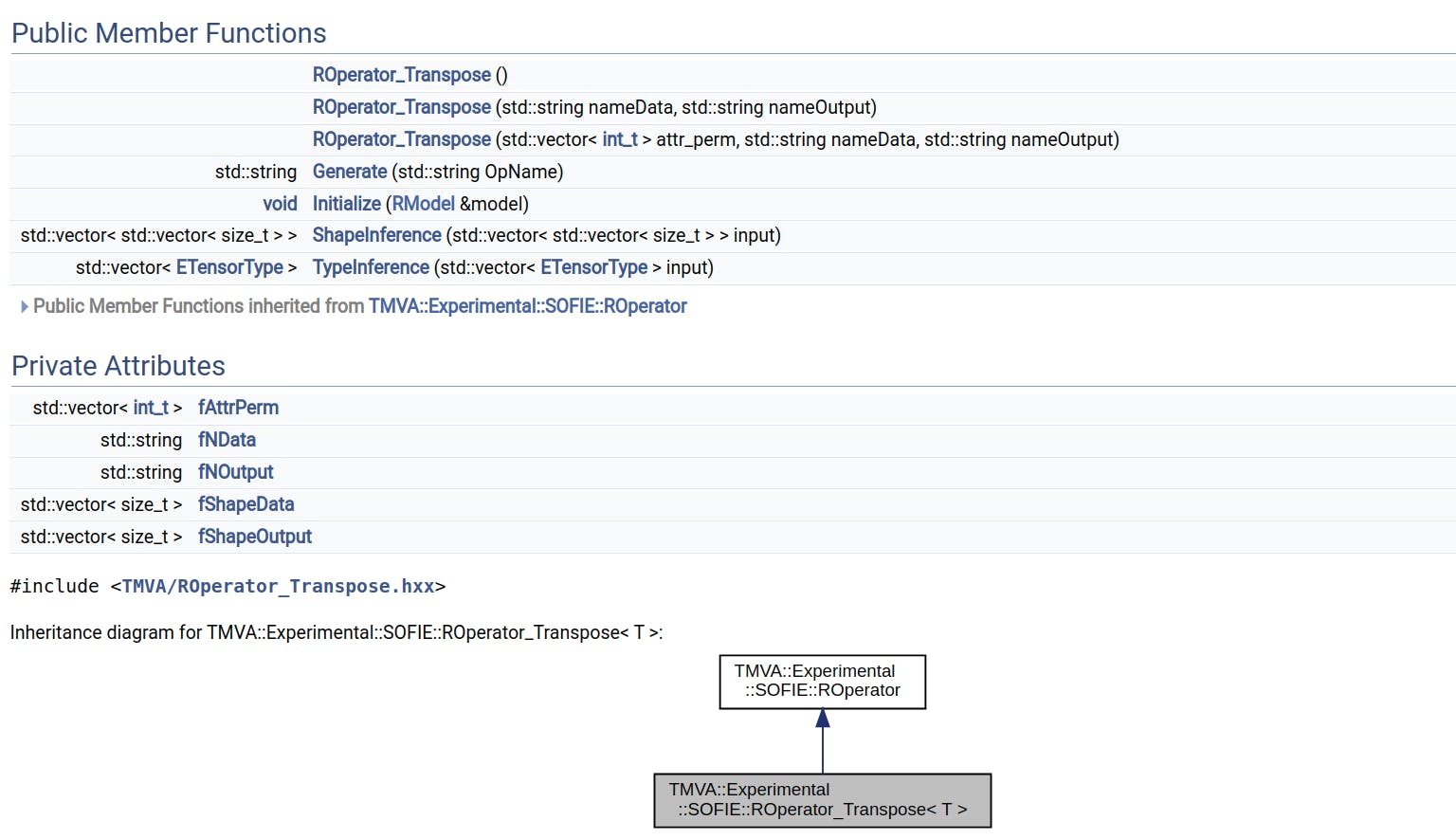

At the beginning, definitions were available for the Gemm, ReLU, and Transpose operators; other operators such as Convolution, RNN, GRU, LSTM, and Batch & Instance Normalization are currently under development.
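As a reference for what these three operators compute, here is a minimal pure-Python sketch on nested lists. It follows the ONNX definition of Gemm, Y = alpha * A' * B' + beta * C, but assumes C is a one-dimensional bias broadcast over the rows; the function names are illustrative and not part of SOFIE.

```python
def transpose(m):
    """Swap rows and columns of a 2-D nested list."""
    return [list(col) for col in zip(*m)]


def gemm(a, b, c, alpha=1.0, beta=1.0, trans_a=False, trans_b=False):
    """ONNX-style Gemm with a 1-D bias c (assumption: no other broadcast forms)."""
    if trans_a:
        a = transpose(a)
    if trans_b:
        b = transpose(b)
    b_cols = transpose(b)  # iterate over columns of B for the dot products
    return [
        [alpha * sum(x * y for x, y in zip(row, col)) + beta * c[j]
         for j, col in enumerate(b_cols)]
        for row in a
    ]


def relu(m):
    """Element-wise max(0, v)."""
    return [[max(0.0, v) for v in row] for row in m]


x = [[1.0, 2.0]]                          # shape (1, 2)
w = [[1.0, 0.0, -3.0], [0.5, 1.0, 1.0]]   # shape (2, 3)
bias = [0.0, 0.5, 0.5]
y = relu(gemm(x, w, bias))
```

A Keras Dense layer with a ReLU activation corresponds to this Gemm followed by Relu.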

Each operator is defined in its own class, with ROperator as the base class. Each definition holds the attributes that specify the operator's properties, along with its own Generate(), which is called through the base class and generates the inference code for that operator from the provided attributes.

ROperator_Gemm

ROperator_Relu

ROperator_Transpose
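The base-class pattern described above can be sketched in Python as follows. The class names, constructor arguments, and the emitted C++ string are all hypothetical; they only illustrate the idea of each operator producing its own piece of inference code, not what SOFIE actually generates.

```python
from abc import ABC, abstractmethod


class Operator(ABC):
    """Hypothetical base class playing the role described for ROperator."""

    @abstractmethod
    def generate(self) -> str:
        """Return this operator's inference code as a string."""


class Relu(Operator):
    def __init__(self, input_name, output_name, size):
        self.input_name = input_name
        self.output_name = output_name
        self.size = size

    def generate(self) -> str:
        # Illustrative C++ loop; the real generated code may look different.
        return (
            f"for (int i = 0; i < {self.size}; i++) "
            f"{self.output_name}[i] = {self.input_name}[i] > 0 ? {self.input_name}[i] : 0;"
        )


operators = [Relu("x", "y", 4), Relu("y", "z", 4)]
# The model only needs the base-class interface to assemble the full code.
code = "\n".join(op.generate() for op in operators)
```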

2. Parsing Keras .h5 model

A Keras model can be built in three ways: with the Sequential API, with the Functional API, or by model subclassing. Of the three, we currently needed parsers only for models built with the Sequential and Functional APIs.

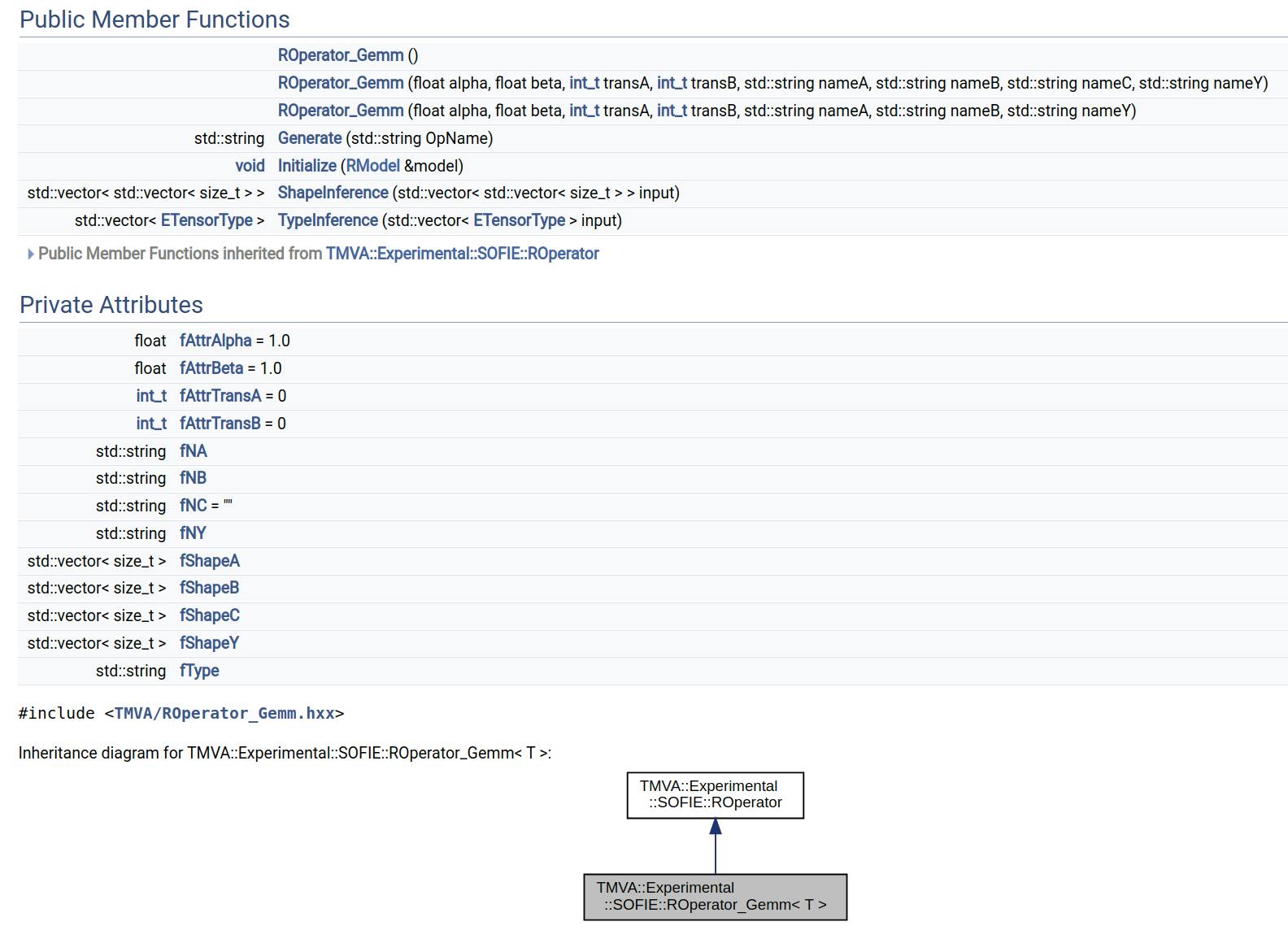

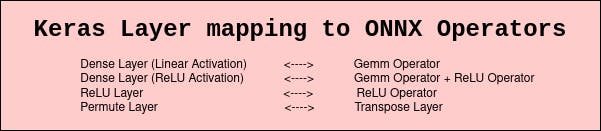

In Keras, neural network architectures are built by stacking layers of different operations, each of which computes on its incoming tensors and passes the output to the adjoining layer. ONNX, like the RModel architecture, uses a graph in which the nodes are the operators and the edges are the operands, i.e. the tensors. Translating the Keras layers into their analogous ONNX operators therefore requires the following mapping.

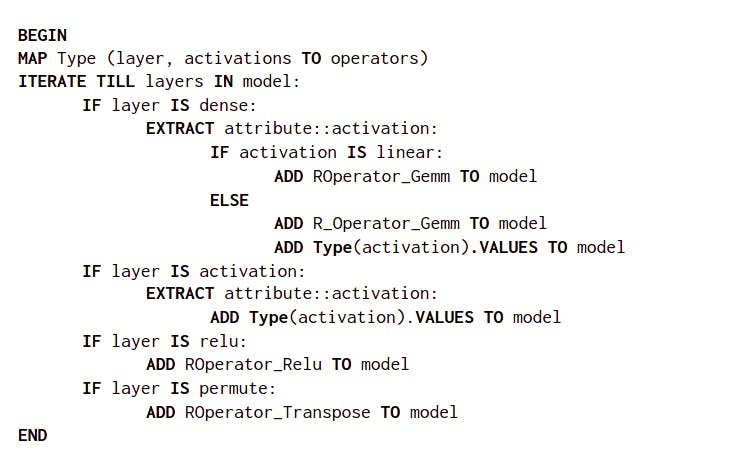
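A mapping of this kind can be sketched as a small lookup function. The specific pairs below (Dense to Gemm plus an activation operator, ReLU to Relu, Permute to Transpose) are assumptions based on the three operators mentioned earlier, and `layer_to_operators` is a hypothetical helper rather than part of the parser. The config keys are the ones returned by a Keras layer's `get_config()`.

```python
# Assumption: only the activations listed here have a matching operator.
ACTIVATION_TO_OPERATOR = {"relu": "Relu"}


def layer_to_operators(class_name, config):
    """Map one Keras layer (class name + get_config() dict) to operator names."""
    if class_name == "InputLayer":
        return []  # inputs become input tensors, not operators
    if class_name == "Dense":
        operators = ["Gemm"]
        activation = config.get("activation", "linear")
        if activation != "linear":
            if activation not in ACTIVATION_TO_OPERATOR:
                raise NotImplementedError(f"unsupported activation: {activation}")
            operators.append(ACTIVATION_TO_OPERATOR[activation])
        return operators
    if class_name == "ReLU":
        return ["Relu"]
    if class_name == "Permute":
        return ["Transpose"]
    raise NotImplementedError(f"unsupported layer: {class_name}")


layers = [
    ("InputLayer", {"name": "input_1"}),
    ("Dense", {"name": "dense", "units": 8, "activation": "relu"}),
    ("Dense", {"name": "dense_1", "units": 2, "activation": "linear"}),
]
graph = [op for name, cfg in layers for op in layer_to_operators(name, cfg)]
```

One Keras layer can therefore expand into more than one operator node, which is why the translation works on the layer configuration and not only on the layer type.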

Translating the Keras model into an RModel object involves extracting the layer configuration, the model weights, and the input/output tensor info, and then adding this data to the RModel object through the provided functions, with appropriate casting and manipulation. The extraction works as follows.

1. Extracting model information

    import keras
    from keras.models import load_model

    # Load the architecture and the weights from the saved .h5 file
    kerasModel = load_model(filename)
    kerasModel.load_weights(filename)

    # For every layer, collect its type, config, input/output tensors, and weight names
    modelData = []
    for idx in range(len(kerasModel.layers)):
        layer = kerasModel.get_layer(index=idx)
        layerData = []
        layerData.append(layer.__class__.__name__)
        layerData.append(layer.get_config())
        layerData.append(layer.input)
        layerData.append(layer.output)
        layerData.append([x.name for x in layer.weights])
        modelData.append(layerData)

2. Extracting model weights (initialized tensors)

    # Same file as in step 1
    kerasModel.load_weights(filename)

    # Fetch the weight arrays once instead of on every loop iteration
    weightValues = kerasModel.get_weights()
    weight = []
    for idx in range(len(weightValues)):
        weightProp = {}
        weightProp['name'] = kerasModel.weights[idx].name
        weightProp['dtype'] = weightValues[idx].dtype.name
        weightProp['value'] = weightValues[idx]
        weight.append(weightProp)
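Before a weight can be added as an initialized tensor, its shape and a flat sequence of values are needed. The sketch below shows that idea on plain nested lists so it runs without any dependencies; `shape_and_flat` is a hypothetical helper, and with the NumPy arrays returned by `get_weights()` one would read `.shape` and call `.ravel()` instead.

```python
def shape_and_flat(values):
    """Return (shape, flat list) for a rectangular nested list."""
    shape = []
    level = values
    while isinstance(level, list):
        shape.append(len(level))
        level = level[0] if level else None
    flat = values
    for _ in shape[1:]:  # flatten one nesting level per extra dimension
        flat = [v for sub in flat for v in sub]
    return tuple(shape), list(flat)


kernel = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]  # e.g. a Dense kernel of shape (2, 3)
bias = [0.1, 0.2, 0.3]
kernel_shape, kernel_flat = shape_and_flat(kernel)
bias_shape, bias_flat = shape_and_flat(bias)
```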

3. Extracting input and output tensor info

    inputNames = kerasModel.input_names
    inputShapes = kerasModel.input_shape

    # str(dtype) looks like "<dtype: 'float32'>"; the slice keeps only "float32"
    inputTypes = []
    for idx in range(len(kerasModel.inputs)):
        inputTypes.append(str(kerasModel.inputs[idx].dtype)[9:-2])

    # Output tensor names are taken from the layers listed as model outputs
    outputNames = []
    for layerName in kerasModel.output_names:
        outputNames.append(kerasModel.get_layer(layerName).output.name)
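The `[9:-2]` slice in step 3 depends on the exact string form of the dtype. Assuming that form is `<dtype: 'float32'>`, the sketch below shows what the slice extracts and a slightly more defensive variant using a regular expression; `dtype_name` is a hypothetical helper, not part of the parser.

```python
import re


def dtype_name(dtype_repr):
    """Extract 'float32' from a string like "<dtype: 'float32'>"."""
    match = re.fullmatch(r"<dtype: '(\w+)'>", dtype_repr)
    if match is None:
        raise ValueError(f"unexpected dtype string: {dtype_repr!r}")
    return match.group(1)


example = "<dtype: 'float32'>"
sliced = example[9:-2]        # drops the leading "<dtype: '" and the trailing "'>"
parsed = dtype_name(example)  # fails loudly if the format ever changes
```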

The development of the Keras Parser for SOFIE can be tracked in PR#8430.

3. Tests

Tests for the Keras parser for SOFIE were written with Google's GTest framework, which ROOT supports. Two major end-to-end tests compare the output tensors predicted by the saved Keras model with those predicted by the parsed model.

Developing the tests involved implementing an EmitFromKeras.cxx file, which is used during the build to parse the models and generate the header files for the inference code. These header files are included in TestRModelParserKeras.C to access infer(), which processes the input and returns the output of the parsed model. The EmitFromKeras.cxx file runs Python scripts that train and save a Keras Sequential model and a Functional API model in a Python interpreter session accessed through the Python C API. Each saved model is then parsed and loaded into an RModel object to generate the .hxx header files.

The outputs of the Keras model and of the RModel's generated inference code, computed on the same inputs, are then compared in the test files: GTest's EXPECT_EQ() compares the shapes of the output tensors, and EXPECT_LE() compares their individual values. A test passes when the output tensors have the same shape and their individual values differ by no more than 1E-6.
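The pass criterion can be written down in a few lines. The sketch below is in Python rather than GTest, and `outputs_match` is a hypothetical helper; it only mirrors the two checks described above, equal shapes and an element-wise difference of at most 1E-6, on flat lists of values.

```python
def outputs_match(expected, actual, expected_shape, actual_shape, tol=1e-6):
    """True when shapes are equal and every value differs by at most tol."""
    if tuple(expected_shape) != tuple(actual_shape):
        return False
    if len(expected) != len(actual):
        return False
    return all(abs(e - a) <= tol for e, a in zip(expected, actual))


keras_out = [0.25, 0.75]
sofie_out = [0.2500004, 0.7499997]  # made-up values for illustration
same = outputs_match(keras_out, sofie_out, (1, 2), (1, 2))
different = outputs_match(keras_out, [0.25, 0.76], (1, 2), (1, 2))
```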

This marks the initial development of the Keras Parser, which will need regular updates as new ROperators are added.

In my next blog for the GSoC'21 with CERN-HSF series, I will be introducing the PyTorch Parser for SOFIE's RModel class, which will be able to translate a PyTorch .pt file into an RModel object.

kwaheri,

Sanjiban

- The images showing the class definitions, data members, and member functions of RModel and ROperators are from the official ROOT Reference Documentation from CERN.

- The image of the neural network architecture with input, hidden, and output layers is from PyImageSearch.